You don’t need to spend a dime on fancy AI code review platforms to get powerful results.

With just a few lines of Bash and access to an AI API, you can build your own automated reviewer that runs right inside your CI pipeline.

You can find functional example code in the following demo repository:

ChatGPT Wrappers Everywhere

Most commercial AI review tools are just wrapping the same API calls you can make yourself — but they add price tags, dashboards, and restrictions you might not even need. When you roll your own setup, you stay in control:

- You decide what’s analyzed and how it’s reported.

- You know exactly where your code and data go.

- You can customize the AI prompts, rules, and thresholds to match your workflow.

DIY AI Review Overview

Let’s break down how an AI reviewer actually works under the hood. It’s simple: just three moving parts connected by your CI pipeline.

- File selection: choose the files to analyze, usually the files changed in a PR.

- AI review: send the changed files to the AI API endpoint with a suitable prompt.

- Results parsing: read the results and take some action. For example, log them in a dashboard for further review or fail the CI pipeline if there are too many findings.

Step 1: File Selection

Your AI reviewer doesn’t need to analyze the entire codebase. It can focus on only what changed in the PR.

In most CI systems, you can use Git to grab the list of modified files in a pull request.

For example, on Semaphore, you can do it in one line, thanks to SEMAPHORE_GIT_COMMIT_RANGE:

git diff --name-only "$SEMAPHORE_GIT_COMMIT_RANGE"

This command outputs the paths of every file modified between the base and head commits of the PR or the push that triggered the pipeline. You can then filter them by extension (*.js, *.py, etc.) or directory (e.g. src/) before sending them to the AI.
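The filtering step could look something like the minimal sketch below. The function name `filter_reviewable` and the policy it applies (only `.js` and `.py` files under `src/`) are assumptions made for illustration; adjust the patterns to your own repository layout.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads file paths on stdin and prints only those worth reviewing.
# Assumed policy for illustration: .js and .py files anywhere under src/.
filter_reviewable() {
  local path
  while IFS= read -r path; do
    case "$path" in
      src/*.js | src/*.py) printf '%s\n' "$path" ;;
    esac
  done
}

# Usage in CI (--diff-filter=d drops files that were deleted in the change):
#   git diff --name-only --diff-filter=d "$SEMAPHORE_GIT_COMMIT_RANGE" | filter_reviewable
```

In a `case` pattern, `*` also matches slashes, so `src/*.py` covers nested paths such as `src/lib/util.py`.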

Step 2: Ask Your AI

Next, send those changed files to a model from your preferred provider, such as OpenAI or Anthropic.

A few lines of Bash and curl are enough. For example, with OpenAI:

curl https://api.openai.com/v1/responses \
  -H "Authorization: Bearer $OPENAI_API_KEY" \
  -H "Content-Type: application/json" \
  -d '{
    "model": "gpt-4o-mini-2024-07-18",
    "input": [
      {"role": "system", "content": [{"type": "input_text", "text": "You are a senior code reviewer. Respond in JUnit XML."}]},
      {"role": "user", "content": [{"type": "input_text", "text": "Review these files:"}]}
    ],
    "temperature": 0
  }'

You can tune the prompt to match your priorities: security, performance, maintainability, documentation, or anything else. Just make sure to request a structured output, like JSON or JUnit XML, so you can parse it automatically in the next step.
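The request above sends the text "Review these files:" without any file contents, so a real pipeline has to assemble the user message first. Here is one possible sketch. The function names and the `=== path ===` delimiter are hypothetical, and `build_payload` assumes `jq` is installed on the CI agent so that JSON escaping is not done by hand.

```bash
#!/usr/bin/env bash
# Sketch only: function names and the "=== path ===" delimiter are illustrative.

# Read file paths on stdin and print one prompt containing each file's contents.
build_review_prompt() {
  local path
  printf 'Review these files:\n'
  while IFS= read -r path; do
    [ -f "$path" ] || continue   # skip paths that no longer exist (e.g. deleted files)
    printf '\n=== %s ===\n' "$path"
    cat -- "$path"
  done
}

# Wrap the prompt in a request body; jq (assumed available) handles the JSON escaping.
build_payload() {
  jq -n --arg prompt "$1" '{
    model: "gpt-4o-mini-2024-07-18",
    input: [
      {role: "system", content: [{type: "input_text", text: "You are a senior code reviewer. Respond in JUnit XML."}]},
      {role: "user", content: [{type: "input_text", text: $prompt}]}
    ],
    temperature: 0
  }'
}

# Usage in CI (not executed here):
#   prompt=$(git diff --name-only "$SEMAPHORE_GIT_COMMIT_RANGE" | build_review_prompt)
#   build_payload "$prompt" | curl https://api.openai.com/v1/responses \
#     -H "Authorization: Bearer $OPENAI_API_KEY" \
#     -H "Content-Type: application/json" \
#     --data-binary @-
```

Passing the prompt through `jq --arg` keeps quotes, backslashes, and newlines in the source files from breaking the JSON body. Large changes may exceed the model's context window, so you may need to cap the number or size of files you send.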

Step 3: Process the Result

Once the AI sends its response, parse it in CI to make the output actionable.

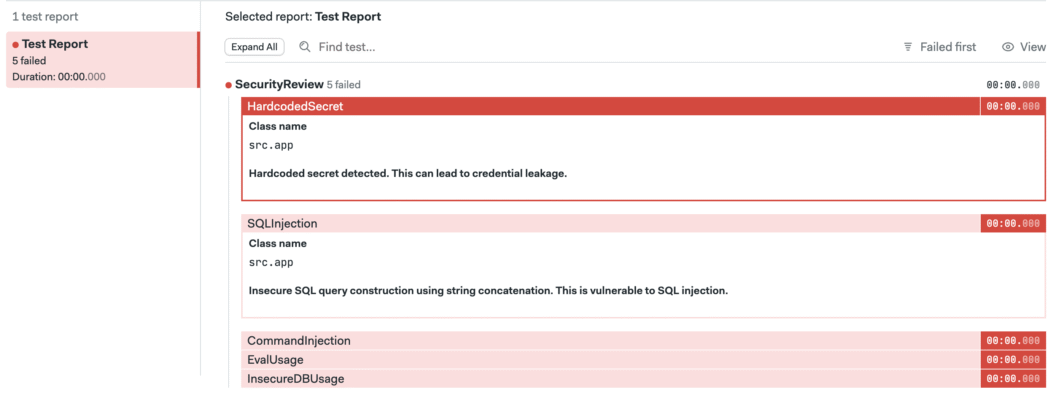

For example, if the AI returns JUnit XML, you can use Semaphore’s built-in test reports dashboard to visualize findings.

Alternatively, you can even add a threshold rule that fails the pipeline if too many issues are found.
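A threshold rule of that kind might be sketched as follows, assuming the AI's JUnit XML has already been saved to a file. The function names, the report file name, and the limit are all hypothetical, and counting elements with `grep` is a rough approximation rather than real XML parsing.

```bash
#!/usr/bin/env bash
# Sketch only: names and the threshold value are illustrative assumptions.

# Count <failure> and <error> elements in a JUnit XML report read from stdin.
# Closing tags (</failure>) and attributes such as failures="2" are not counted.
count_findings() {
  { grep -oE '<(failure|error)[ />]' || true; } | wc -l | tr -d ' '
}

# Succeed only if the report at $1 contains at most $2 findings.
check_threshold() {
  local findings
  findings=$(count_findings < "$1")
  echo "AI review findings: $findings (max allowed: $2)"
  [ "$findings" -le "$2" ]
}

# Usage in CI (not executed here; review.xml and the limit of 5 are placeholders):
#   check_threshold review.xml 5 || exit 1
```

Because `check_threshold` returns a non-zero status when the limit is exceeded, the CI job fails without any extra logic.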

Next Steps

Building your own AI code review can be fun, practical, and a low-cost way to bring intelligent automation into your CI pipeline. You decide what’s analyzed, how the feedback looks, and what happens when issues are found. Think of this as the start of the journey. It will take some time and work to get to the point where you’re satisfied with the reviews, but it will be worth it.

If you’re ready to try it, check out the example repository linked below as a starting point for your experiments.

Thank you for reading and happy building!
